XML, JSON, trees and LISP

This post is a run-through of some of my thoughts around the formats we surround ourselves with, when developing networked systems - especially on the web but elsewhere as well.

As you may have realized, my web site is antisocial. I do not have a comments section and this is a deliberate choice. If, however, you wish to provide insights or factual corrections, I very much welcome them via e-mail.

Trees... it's all about trees

A web page is, behind the scenes, a DOM tree in the rendering engine of the browser. Usually when we want to serialize that tree, we serialize it to XML. This is not because XML is in some way superior to other representations, it is more an accident of history.

Back in the very early days of the web when HTML was created, it was created solely for markup and did not describe formal tree structures. For example, interlacing elements were allowed; <b>this <em>was</b> valid;</em>.

During the '90s browser wars, one of the things that came out of the standardization effort was that someone (W3C I suppose) realized that transforming HTML from very unstructured markup into a stricter structure and giving it a new name with some resemblance to the original, would probably be necessary if anyone were to ever produce standards of what browsers should support and how they should do it. Thus XML was born.

XML is a properly strict specification with clear rules of what is allowed and what isn't, very much unlike the days of ad-hoc HTML. In XML, interlacing elements as in the previous example are not allowed. Unlike the early HTML, XML only allows for actual tree structures - for example:

One example, of course, is S-expressions. These date back to the 1950s, but they are every bit as fine for representing trees as XML is - the same tree would look like this;
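To make the comparison concrete, here is a minimal Python sketch that writes one and the same tree out both ways. The tree itself (a `document` with a `title` and two `para` elements) and the helper names `to_xml` and `to_sexp` are made up for illustration; they are assumptions, not anything from a real system.

```python
from xml.sax.saxutils import escape

# A node is (name, children); a leaf child is a plain string.
# The tree below is a made-up illustration.
tree = ("document",
        [("title", ["Hello"]),
         ("body", [("para", ["First"]), ("para", ["Second"])])])

def to_xml(node):
    """Serialize a node as XML: every element name is written twice."""
    if isinstance(node, str):
        return escape(node)
    name, children = node
    return "<%s>%s</%s>" % (name, "".join(to_xml(c) for c in children), name)

def to_sexp(node):
    """Serialize a node as an S-expression: the name is written once."""
    if isinstance(node, str):
        return '"%s"' % node.replace("\\", "\\\\").replace('"', '\\"')
    name, children = node
    return "(%s)" % " ".join([name] + [to_sexp(c) for c in children])

xml_text = to_xml(tree)
sexp_text = to_sexp(tree)
print(xml_text)
print(sexp_text)
print(len(xml_text), len(sexp_text))
```

Both outputs describe exactly the same tree; the only difference is how much punctuation and repetition it takes to say so.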

At the time of the inception of HTML, nobody could of course know where HTML would eventually go; I am not saying that Tim Berners-Lee and his associates did not do well, or that W3C did a poor job of formalizing HTML into XML - but it should be clear that if your goal is to serialize a tree structure into readable text, XML is a far cry from being compact or easy on the eyes. And it is indisputable that more compact representations predate XML by at least three decades.

So what's in a tree anyway?

Trees hold a lot more than just DOM structures. Any of the programming languages we use get parsed into a tree structure when we pass our source code to the computer (for compilation, interpretation or otherwise). An AST (abstract syntax tree) is one such tree structure. Let's cook up an example; a conditional statement that performs one of two operations based on a test:

This could be written in C++ as:

But wait... If that is just a tree... could we write the code in XML too? I'm glad you asked!

Now that clearly doesn't make the code more readable. I guess this explains why Bjarne didn't pick XML for his "C with Classes"... But how about if we chose a more compact representation, say, like S-expressions again?

How about that? Now both the C++ and the S-expression representations of the tree are a lot shorter than the XML representation. However, the C++ representation uses a rather intricate syntax; notice how a surrounding pair of parentheses is used to group the first argument to the if (the test), while a semicolon is used to separate the second argument (the positive) from the else and the third argument (the negative). Finally, a semicolon is used to separate this conditional expression from any expressions that may follow.

In comparison, the S-expression representation uses a surrounding parenthesis to group the expression - this replaces the ending semicolon from the C++ example. But every argument to if is simply separated by a space; this is why the S-expression is considerably shorter than the C++ example (and completely consistent in its syntax). Anyway this is not about measuring lengths of expressions (or anything else), it is merely about giving examples of the various ways in which we represent the tree structures that we surround ourselves with.
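The consistency of the syntax also shows in how little code it takes to read it back into a tree. Below is a minimal Python sketch of an S-expression reader that handles only parentheses and bare atoms (no strings, quoting or comments). The sample expression and the names `negate` and `keep` are hypothetical stand-ins for the two operations of the conditional.

```python
def tokenize(text):
    """Split S-expression text into parentheses and atoms."""
    return text.replace("(", " ( ").replace(")", " ) ").split()

def parse(tokens):
    """Consume tokens from the front and return one nested-list tree."""
    if not tokens:
        raise SyntaxError("unexpected end of input")
    token = tokens.pop(0)
    if token == "(":
        node = []
        while tokens and tokens[0] != ")":
            node.append(parse(tokens))
        if not tokens:
            raise SyntaxError("missing closing parenthesis")
        tokens.pop(0)  # drop the closing parenthesis
        return node
    if token == ")":
        raise SyntaxError("unexpected closing parenthesis")
    return token  # an atom

# Hypothetical conditional: test, positive branch, negative branch.
source = "(if (< x 0) (negate x) (keep x))"
tree = parse(tokenize(source))
print(tree)
```

The whole grammar is "a list is a parenthesized sequence of lists and atoms", which is why the reader fits in a couple of dozen lines.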

Anyone remember PHP?

So back in the days of "Personal Home Page", the HTML you wrote could be extended in clever ways with actual code that would be executed by the server on which your web site ran. This was almost really clever; since HTML lends itself to extension (eXtensible Markup Language - it got that name for a reason) simply by adding your own elements, PHP offered the HTML author an elegant way to escape from the HTML (that would become the DOM tree in the user's browser) into the world of in-server processing.

Top points for elegant extension here - the contents of the <?php> element in the document are simply evaluated on the server. Beautiful. But... what about consistency? Both the to-be DOM data (the static HTML) and the PHP code are tree structures, and one is a sub-tree of the other. Why do we use separate representations?

Looking at our previous attempt at representing the simple conditional in XML, I think it is quite clear why Rasmus didn't pick XML representation for his language either. Let's try it anyway:

Yuck. So given the choice, I think it is clear why they chose inconsistency over the alternative. But... What if the web wasn't HTML? What if... say... Tim had used S-expressions? Let's try:

Well, be that as it may - it's probably a little late to change the web from XML to S-expressions. But anyway, this example is a useful primer for what comes next.

Oh... and in case you're thinking that the four ending parentheses look scary, don't worry. Any decent editor will do parenthesis matching and any decent developer should be using a decent editor. Trust me when I tell you that sequences of parentheses are not a practical problem when developing using S-expressions; it looks like trouble, I know, but in the real world it just isn't.

Escaping XML - JSON to the rescue?

The JavaScript community has long worked with XML by means of the tight DOM integration in the language. Sometimes they would work with textual XML, other times they would manipulate objects in the DOM directly. The XMLHTTPRequest method performs an HTTP request and provides, as the name implies, immediate access to an internal representation of the XML response received.

No developer should ever have to write XML; developers will produce and manipulate tree structures, and if needed those can be serialized to XML or parsed from XML; but there should never be a situation in which an actual person sits down and types actual XML. XML is just too inconvenient, too verbose and too silly, for a human to type.

It appears that the JavaScript community discovered that rather than working with XML documents, it would be more convenient to write plain JavaScript and use the eval() function on the receiver side to parse this. Once again, I have to award top points for consistency - using only JavaScript rather than using XML as well (one language is usually better than two if you have any amount of code and people on your project). Of course, it wasn't long before the security implications of executing eval() on code received from foreign systems were realized. Today, the valid subset of JavaScript that is sent between systems is called JSON, and it is no longer parsed using eval(). So far so good.
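The difference between evaluating a payload and parsing it can be sketched in a few lines. The example below uses Python rather than JavaScript, purely as an analogy: Python's `eval()` plays the role of JavaScript's `eval()`, and `json.loads` plays the role of a dedicated JSON parser. The payloads and the helper name `parse_untrusted` are made up for illustration.

```python
import json

# Two payloads as they might arrive from a remote system (both made up).
payload_data = '{"user": "alice", "roles": ["admin", "dev"]}'
payload_code = '__import__("os").getcwd()'  # code, not data

def parse_untrusted(text):
    """Parse text as JSON; return None if it is anything but JSON."""
    try:
        return json.loads(text)
    except json.JSONDecodeError:
        return None

data = parse_untrusted(payload_data)
rejected = parse_untrusted(payload_code)

# Passing payload_code to eval() instead would have executed it on the
# receiver; the parser only ever builds data structures.
print(data)
print(rejected)
```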

Inside a pure JavaScript system, JSON thus makes some sense as a textual representation of a tree structure. But in the effort to avoid the security problems of arbitrary code execution from remote systems, the JSON syntax is a far cry from full JavaScript - therefore, as with the C++ versus XML code sample, JSON suffers from the same deficiency: the limited, cumbersome syntax becomes very verbose when representing arbitrary trees. An example is in order.

Let's take the original C++ example;

Now let us try rewriting this into JSON:

While shorter than the XML representation it's still a long way from the briefness of the original C++ or the even shorter S-expression. Again, beauty is in the eye of the beholder, I shall refrain from commenting on what the JSON looks like.
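For a rough feel of the difference in size, here is a small Python sketch that serializes one possible JSON encoding of a conditional and compares it with an equivalent S-expression. Both the JSON layout (the keys `test`, `then` and `else`) and the operation names `negate` and `keep` are assumptions chosen for illustration; there are many other ways to lay out such a tree in JSON.

```python
import json

# One hypothetical way to encode "if test then A else B" as JSON data.
tree = {"if": {"test": {"<": ["x", 0]},
               "then": {"negate": ["x"]},
               "else": {"keep": ["x"]}}}

# Compact separators, so whitespace does not inflate the comparison.
json_text = json.dumps(tree, separators=(",", ":"))

# The same (hypothetical) conditional as an S-expression.
sexp_text = "(if (< x 0) (negate x) (keep x))"

print(json_text)
print(sexp_text)
print(len(json_text), len(sexp_text))
```

Even with every optional space removed, the JSON needs quotes around every name plus braces, brackets and colons to say what the S-expression says with parentheses and spaces.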

This example is not as contrived as it may seem on the surface. There are indeed real-world projects that serialize their languages into JSON - one such example is the ElasticSearch Query DSL.

In all brevity, JSON does not bring anything to the table that XML or S-expressions didn't do already. It is slightly shorter than XML but it's clearly not the shortest representation. You can't possibly argue that it is significantly prettier than the others (I'll go as far as to agree it's different). It's not more (or less) efficient; it requires a specialized parser like anything else. It was never structured the way it was for any of those reasons either - JSON today looks like it does, because it's a valid subset of JavaScript.

In other words; JSON was never intended to be brief. It was never intended to be efficient. It was never intended to be easily readable. It was never intended to have any other particular quality one might wish from a tree representation language; it is what it is, because it is a valid subset of JavaScript.

The story is the same with XML of course, with XML not being designed from the ground up but looking like it does because of its HTML predecessor. So don't take this as a knocking of JSON in particular - all I'm saying is, JSON is no better than XML for all intents and purposes - and therefore in my view it is useless. It simply doesn't bring anything to the table that wasn't there already.

So is everything just bad then?

I'm glad you asked! No, of course everything isn't just bad. And this post isn't meant to make it sound like I think all is bad. I am simply trying to make you think about trees and how we represent them in writing.

I have been working quite a bit with XML data exchanges lately, and I have been fortunate enough to be able to do much of that work in a language that is itself an S-expression; namely LISP. This has been an interesting experience that has brought back memories from the days of PHP, but with actual elegance and consistency. It's almost too good to be true - and it's definitely good enough to share.

As we established earlier, XML and S-expressions are two of the same - they are simply representations of tree structures. XML is nasty to write though, so before doing anything else, I built an XML parser and an XML generator; two procedures that would convert a string of XML into an S-expression, and convert an S-expression into a string of XML. Like this:
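The same pair of conversions can be sketched in Python, with nested lists standing in for S-expressions. This is only an illustration of the idea, not the LISP code described above: it assumes elements without attributes and without mixed content, and the function names `xml_to_tree` and `tree_to_xml` are made up.

```python
import xml.etree.ElementTree as ET

def xml_to_tree(text):
    """Parse an XML string into nested lists of the form [name, child, ...]."""
    def convert(element):
        node = [element.tag]
        if element.text and element.text.strip():
            node.append(element.text.strip())
        node.extend(convert(child) for child in element)
        return node
    return convert(ET.fromstring(text))

def tree_to_xml(node):
    """Serialize nested lists back into an XML string."""
    def build(node):
        element = ET.Element(node[0])
        for child in node[1:]:
            if isinstance(child, list):
                element.append(build(child))
            else:
                element.text = (element.text or "") + str(child)
        return element
    return ET.tostring(build(node), encoding="unicode")

# Sample document; the values are made up.
text = "<status><version>1.2</version><db-time>2015-01-01</db-time></status>"
tree = xml_to_tree(text)
print(tree)
print(tree_to_xml(tree))
```

Once the document is a plain nested list, all the ordinary list tools of the language apply to it, which is the convenience the S-expression form gives for free.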

Now hang on - how's that for consistency? LISP is homoiconic; the language itself is represented in a basic data type of the language. Or the code looks like the data, to put it in another way. While perhaps confusing to the novice, it's inarguably consistent. With the ability to construct our tree structure and then later convert it to a string of XML, this opens up for some very convenient XML processing.

Consider, for example, an API endpoint handler that must return an S-expression which the HTTP server then converts to XML:

Is that sweet or what? Executing this code we get:

Ultimately, when the HTTPd executes the api handler code it will also invoke the S-expression to XML conversion. So what is executed is of course more like:

Notice how my static document data (the status outer document and the version, api-time and db-time subdocuments structure) are written and how function calls and variables are trivially interwoven (by means of the comma operator). Building up large and complex tree structures from LISP is, in other words, a comparatively nice experience - and turning the S-expression to XML is trivial as already demonstrated.
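For readers who do not speak LISP, the shape of such a handler can be approximated in Python: the static structure is written as a literal nested list, and the dynamic values are spliced in where they belong. This is a sketch of the idea only. The functions `status_handler`, `db_time` and `to_xml`, the version string and the timestamps are all hypothetical.

```python
import time
from xml.sax.saxutils import escape

def to_xml(node):
    """Serialize [name, child, ...] nested lists into an XML string."""
    if not isinstance(node, list):
        return escape(str(node))
    name, children = node[0], node[1:]
    return "<%s>%s</%s>" % (name, "".join(to_xml(c) for c in children), name)

def db_time():
    """Stand-in for asking the database for its clock (hypothetical)."""
    return "2015-01-01T12:00:00Z"

def status_handler(version="1.0", clock=time.gmtime):
    """Return the response as a tree; the server turns it into XML."""
    api_time = time.strftime("%Y-%m-%dT%H:%M:%SZ", clock())
    return ["status",
            ["version", version],
            ["api-time", api_time],
            ["db-time", db_time()]]

# What the HTTP server would do: call the handler, then serialize.
# A fixed clock is passed in so the output is reproducible.
response = to_xml(status_handler(clock=lambda: time.gmtime(0)))
print(response)
```

The handler never touches angle brackets; it only builds a tree, and serialization happens in exactly one place.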

Where do we go from here?

Where we can go with this exactly, I'm not sure. Doing XML API work in LISP is an absolute joy, that much I can say. The verbosity of S-expressions is minimal and I think the actual implementations are usually really elegant - having the language itself be an S-expression provides a level of consistency I am not used to from other languages.

I think it would be interesting to look into generating asm.js from LISP, as a way to efficiently deliver web applications but being able to write them in a more elegant language. Imagine that; having a server-side API server and the actual browser-side web application all developed in a language as elegant and mature as LISP. Certainly this would be a welcome contender to the current Node.js movement where we attempt to use the most inelegant language ever (hastily) conceived to run both the browser and the server.

Getting back on line

It's been quite a while since my last post - time flies when you're having fun. A lot has happened, but I had not taken the time to write about any of it until now. I'm going to try to get back into the habit of writing here. Here are some headlines...

An ode to the thin client

I've been an extremely happy user of the Sun (now Oracle) SunRay thin clients, running on both Solaris- and Linux-backed terminal servers. Up until late last year I used a dual-head setup with two SunRays (one driving each monitor), and had done so since around 2002. During those 13 years, the back-end servers have been upgraded in various ways - ultimately, we ended up with virtual Linux servers running in a large VMware cluster backed by Oracle ZFS appliances. So my "desktop computer" (the SunRay server in the datacenter on which my applications ran) had more cores, memory and disks than would fit under and over the desk in my office.

The single big downside to this setup was video (as in full screen motion picture) performance. Everything else worked very well indeed - the SunRays accelerated 2D operations just fine, so regular screen updates, scrolling, moving about and browsing the web were absolutely fine. Since my job isn't watching full screen video, this one limitation in the setup was not really a problem (sure, on Fridays when a colleague links to a funny YouTube video, it can be annoying to have to view it windowed rather than full screen - but really, this was the one downside for me). All in all, while we all like full screen video, I could perform my professional duties on this rig just fine.

There were some significant upsides to the setup - among the big ones I should mention: I had an identical setup at home - so all I had to carry from and to work was a small smart-card, my user session would follow the smart-card. Sometimes I run to work (it's a nice 10k jog), and all I needed to carry was a smart-card. No laptop can compete with that, however lightweight.

Also I never needed to worry about backups and hardware upgrades. My "desktop" computer was hooked up to a large storage system in the datacenter and other people would manage that - no unreliable or slow or too-small disks for me to worry about.

Anyway - since apparently everyone else (even nurses and doctors in hospitals) must be able to play full screen YouTube while at work, the thin client has been discontinued by Oracle. In my opinion this is a shame - not so much that they discontinue the one true thin client product that existed (the X terminals died long before this) - but that IT people around the globe failed to realize the enormous savings a true thin client architecture does indeed provide in workplaces where thin clients are adequate graphically and where the ever-alive session provides massive savings in man-hours (saving personnel from starting and logging on to 5-8 different applications, like they do in most hospitals in this country for example, every single time they need to enter a single data point about a single patient).

I can work with a lot of things, but I can't work on a discontinued

product for many years - time to move on. I need a UNIX workstation,

I want something that "just works", I want to be able to carry it

around (bring it home now that my session can't travel with me any

more) and while watching full screen Youtube still isn't part of my

job, it would be great to be able to do that too (actually, CSS

transitions on modern web could take a toll on the SunRay video

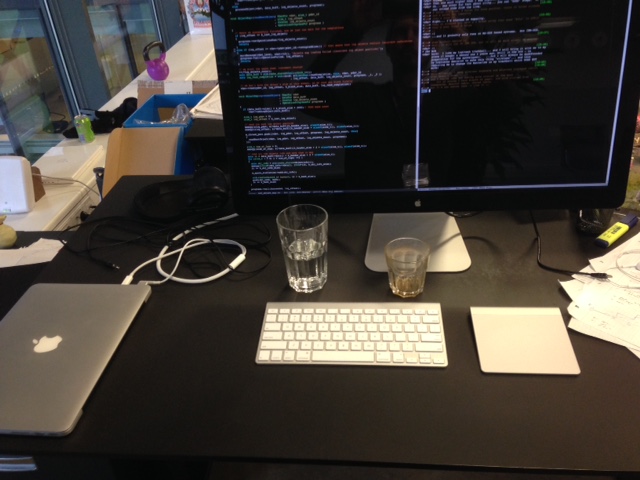

performance too). So I chose a Macbook with a Cinema display for the

office, as well as a bluetooth keyboard and trackpad. This way, when

at the office I have a big screen and a normal keyboard and trackpad

- when at home, I can work from the laptop.

I have not yet run to work after making this switch; clearly a 13" MacBook is going to be a lot heavier than the smart-card was. We'll see how that goes... But aside from that, I really don't have any complaints. Emacs works in full screen mode just as well as it did on the SunRay, and that's where I spend most of my day anyway. Finally, I do love how full screen YouTube plays on this new rig.

Learning LISP

The second big thing that happened to me since the last update is that I'm now doing a significant part of my professional work in Common LISP. Yup, that's right - the language that was invented (or "discovered" if you will) in the late 1950s. I guess that deserves some explaining too.

I was faced with the challenge of having to run some "business processes" for a cloud service we're building. I've seen these things being built many times before and I know how it goes: Someone needs a mail to be sent out in some particular situation - so one developer hacks up a piece of Perl to do that and runs it from cron. Now someone needs to pull a report of some numbers, so another developer cooks up some Python to compile the numbers and a shell script to feed the output to the right location. And so it goes... Each of these individual processes is seen as too small or insignificant to warrant a properly structured approach and before you know it, you will have developed a messaging, reporting, billing and operations automation system using at least five different languages (bash, Perl and Python (probably at least two incompatible versions of Python) happen within weeks, then follows a Java service (everybody needs those), and that guy who learned Ruby needs to use that, and then the web developers figure they could write stuff in JS and run it under Node.js). Look me in the eyes and tell me that's not how it goes. Every. Single. Time... Not out of ill will, but because everyone wants a quick solution to a simple problem. Unfortunately, 10 quick solutions to just as many simple problems aren't quick at all and ultimately aren't really a solution.

So we don't want that. I've implemented a complete automated billing and invoicing system in PL/PGSQL in the past - that was a great experience but I don't want to do that again either (lessons learned). I've also implemented such services in C++ - and while that's my main language, I just really don't think this is a productive experience. The abstractions you can do in C++ are fine, but still, for such diverse jobs as mailing, accounting, reporting and communicating with dozens of systems, I find that in C++ I end up having to type a lot (regardless of the number of libraries I may be able to use). Worse, the continuous testing and incremental refinement of a big distributed and inherently parallel system using the "edit compile run" cycle is terribly inefficient. A massive focus on unit testing will help here, but it will not cure the fundamental problem, that re-starting the full application on every change you need to test there costs a lot of time, every time, all the time.

I was seriously looking at other languages - including the hypes of the day (Ruby and Python as far as I remember). But the thing is; these languages are so close to all the other languages. There's nothing you can do in these languages that you couldn't reasonably do in either Perl or C++ too. Sure, you can pull out some contrived example that would demonstrate some superiority - but you would not be able to solve most real world problems significantly more elegantly, or be able to develop the solutions much faster. All these languages are the same... They look the same, and they fundamentally build on the same ideas; pile a fixed set of features into the language, add libraries for commonly needed functionality outside of that. And that's it. And everyone uses the old "edit compile and run" cycle. Is that really the best we can do?

It turns out, it's not the best we can do. LISP has two absolutely huge benefits over "all the other" (non-LISP) languages:

- You do not work in "edit compile run". Instead, you write your application while it is running.
  - You can develop software faster because the process is much better - you no longer restart your entire application every time you need to test something; you can actually write half a function and test it.

- The language is extensible in ways you cannot imagine until you try it.
  - Object orientation was added as a "library". Pattern-matching can be added as a "library". Any paradigm that some other language cooks up over time can be added by means of a library - LISP is so powerful that you do not need to change the language - a library can do it. More importantly; you can do it - every day, in everything you build. Imagine having that power at your fingertips - trust me, it is hard to work in the "lesser" languages now.

Some time has passed now and we run a good number of important business processes on this LISP system. It was definitely a gamble when we decided to start this experiment - I did not know the language and no-one else on the team did. You won't find many people who know the language - but hey, when hiring C++ developers I generally don't find many people who know that language either (I mean really know it). Compared to the traditional approach where you have a myriad of small components each written in their own language, I far prefer our structured single-language approach here. This system has been a huge success so far - I cannot imagine how much code and how much time would have been needed, had we chosen to do this in PL/PGSQL, C++, Python or any other of the "traditional" (but younger) languages.

Unrestful XMLHTTPRequest

The RESTful model for web service APIs preaches simplicity and brings sanity back into web service design. It has, once again, become acceptable to solve simple problems with simple solutions. I have on several occasions been the principal designer behind an internet-facing RESTful API, and I truly enjoy the power and expressiveness of plain simple HTTP/1.1 as the vehicle that transports requests and responses to and from the APIs.

A little background

I need to point out some obvious things to set the scene for this post. HTTP defines status codes. Every response has one. The ones we all know are "200 OK", "404 Not Found" and perhaps "500 Internal Server Error". But HTTP defines many other status codes - in fact, with a little creative thinking, HTTP will have error codes for pretty much any situation you will run into when building an API. Don't believe me? Take a look for yourself - it is RFC 2616 (yes I know it has been split now, but 2616 is still a good document). The most important aspect is, that when designing an API, you can actually return a real error code in the HTTP response, and you can put a descriptive error message in the body of the response document.

    GET /our/stuff HTTP/1.1
    host: mine.local
    -------------------------
    HTTP/1.1 403 Forbidden
    content-length: ...
    content-type: text/plain

    Hi there. We simply won't allow people from your side of the internet access to OUR stuff. Sincerely, US
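On the server side, producing such a response takes very little. Here is a minimal Python sketch that assembles the raw bytes of an error response, taking the reason phrase from the standard library's `http.HTTPStatus`. The function name `build_response` and the message text are assumptions made for illustration; a real server would normally let its HTTP library do this.

```python
from http import HTTPStatus

def build_response(code, message):
    """Assemble a plain-text HTTP/1.1 response with a real status code."""
    status = HTTPStatus(code)  # raises ValueError for unknown codes
    body = message.encode("utf-8")
    head = ("HTTP/1.1 %d %s\r\n"
            "content-type: text/plain; charset=utf-8\r\n"
            "content-length: %d\r\n"
            "\r\n") % (status.value, status.phrase, len(body))
    return head.encode("ascii") + body

raw = build_response(403, "We won't allow access to OUR stuff.\n")
print(raw.decode("utf-8"))
```

The status line carries the machine-readable verdict and the body carries the explanation for humans, so the client never has to guess which of the two it is looking at.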

The second killer feature of HTTP is its brutal simplicity and consistency. Every request consists of a request line (GET /our/stuff HTTP/1.1 in the above example), some headers and a body. Every response consists of a status line (HTTP/1.1 403 Forbidden in the above example), some headers and a body. There are very few exceptions to this rule. One such exception is that a response to a HEAD request must not include a body - because the whole point of the HEAD request is to do a GET without actually getting the body data. HTTP does however mandate some specific semantics on the methods - a GET request, for example, must be idempotent (meaning, multiple identical invocations of the method must have the same effect as one invocation) where a POST doesn't have to be. This of course makes perfect sense - multiple requests to retrieve a particular document should of course all yield the same result, whereas it would only be natural if the initial creation of a document succeeds while subsequent creations of the same document would fail (with a 409 Conflict error of course).
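The difference between the two methods can be shown with a toy in-memory document store. This is a hedged Python sketch: the class `DocumentStore` and its method names are made up, and returning 201 Created for the first successful creation is my assumption; only the 409 Conflict on repeated creation comes from the text above.

```python
class DocumentStore:
    """Toy stand-in for the server side of a document API."""

    def __init__(self):
        self._docs = {}

    def get(self, name):
        """GET: never changes state, so repeating it changes nothing."""
        if name not in self._docs:
            return 404, ""
        return 200, self._docs[name]

    def create(self, name, body):
        """POST-style creation: succeeds once, conflicts afterwards."""
        if name in self._docs:
            return 409, "document already exists"
        self._docs[name] = body
        return 201, ""

store = DocumentStore()
first = store.create("welcome", "Hello")
second = store.create("welcome", "Hello")
reads = [store.get("welcome") for _ in range(3)]
print(first, second, reads)
```

Repeating the read any number of times yields the same answer, while repeating the creation does not; that is exactly the distinction the method semantics encode.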

Fail 1: error codes

When issuing API requests from JavaScript in a browser using XMLHTTPRequest directly or indirectly through the myriad of JS libraries out there, one would like to issue a request and receive the response. This is what the method facilitates - except if the response status code is 401 Unauthorized. If that status code is sent, the browser will intercept the method return and pop up a horrific looking pop-up, prompting the user to authenticate. What's the point?!? Sure, if the user himself entered the URI in the browser address bar, it makes sense. But the user should not be troubled with return codes from the internal workings of a JS application running in his browser. If the application wishes to authenticate, it could ask the user directly, or the browser could expose a method for this purpose.

The workaround I devised for this, was to accept a header "authentication-failure-code" which can be set to any integer from 400 to 499. If the API wishes to return a 401 status and this header is set, the API will return the given integer instead of 401. This is a simple way to keep the API clean while providing a workaround to the misguided implementations of XMLHTTPRequest out there.
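The server-side logic for this header is small. A minimal Python sketch, assuming the request headers arrive as a dictionary with lower-cased names; the function name `auth_failure_status` is made up, and falling back to 401 when the header value is missing, malformed or out of range is my assumption about sensible behavior rather than something stated above.

```python
def auth_failure_status(headers):
    """Pick the status code to send when authentication fails.

    `headers` is assumed to be a dict with lower-cased header names.
    """
    raw = headers.get("authentication-failure-code")
    if raw is None:
        return 401  # no override requested
    try:
        code = int(raw)
    except ValueError:
        return 401  # not an integer: ignore the header (assumption)
    if 400 <= code <= 499:
        return code
    return 401  # outside the allowed range: ignore the header (assumption)

print(auth_failure_status({}))
print(auth_failure_status({"authentication-failure-code": "418"}))
print(auth_failure_status({"authentication-failure-code": "200"}))
```

A browser client would send the header with a code of its choosing and treat that code as "authentication required", while every other client keeps getting a plain 401.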

Fail 2: GET requests

Let us assume that I have an API which can transform a document from a simple mark-down style text to a full XHTML document with fancy formatting and graphics according to some theme configured in the system. When implementing an editor, I want to execute this API request whenever the user has edited his document, so that we can present an up-to-date view of what the finalized message will look like. Which method would we use for that?

    SOME-METHOD /render/welcome-message HTTP/1.1
    host: api.local
    content-length: ...
    content-type: text/xml

    <render>
      <lang>en-GB</lang>
      <content>Dear ${fullname}

    Once upon a time there was an RFC, but nobody could
    be bothered to read it.

    The end.
      </content>
    </render>
    -------------------------
    HTTP/1.1 200 OK
    content-length: ...
    content-type: text/html

    <!DOCTYPE...>
    <html>
    ...
    <body>
    <h1>Dear John D. Anyuser</h1>
    <p>Once upon a time...

Well, this method would not change anything on the server, so it is definitely idempotent. First off, neither POST nor PUT would be suitable. The request returns a body, so HEAD is also a no-go. In fact, the only reasonable method to use is GET. It even makes perfect sense - we execute a "static" method on the server (a preconfigured rendering routine) with no side effects, just like when we request a static document, or a search result. Instead of encoding the document we wish to have transformed in the path of the URI (which would be inconvenient and even impossible with larger texts), we simply supply it in the body of the request - which is perfectly valid and well defined by the HTTP 1.1 RFC. So what is all the fuss about, you may ask? Why not just go ahead and do this and be done with it? Well, I did, and as it turns out, XMLHTTPRequest will ignore the body in a request if the method is GET. No, I am not kidding and this is not a joke (or at least it is not a very funny one). It is right here.

Yet again I was forced to implement a workaround in an otherwise fairly clean API, to allow for something which I this time completely fail to see the explanation for. I mean, they specifically went to all the trouble of special casing GET so that XMLHTTPRequest specifically would not allow a standard HTTP request - what for? To help us? Please, if that is the case, stop helping. Please just remove the special case from the standard, remove the code necessary to implement this breakage of HTTP support, and thereby allow plain simple RESTful APIs to be used from the browsers that people have. Anyway, the workaround was simple; add another handler so that users can use the "PUT" method (even though that makes NO sense, as nothing gets updated on the server) instead of "GET", thereby bypassing the special case in XMLHTTPRequest that breaks protocol.
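In code, the workaround amounts to registering one handler under two methods. Below is a minimal Python sketch under several assumptions: the routing table `ROUTES`, the `dispatch` function and the `render` stand-in are all hypothetical, and the real rendering routine (themes, graphics, XHTML output) is reduced here to placeholder substitution and paragraph wrapping.

```python
from html import escape
from string import Template

def render(body, variables):
    """Stand-in renderer: fill in ${placeholders}, wrap paragraphs in <p>."""
    text = Template(body).safe_substitute(variables)
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    return "".join("<p>%s</p>" % escape(p) for p in paragraphs)

# The same side-effect-free handler is reachable through both methods:
# GET for well-behaved clients, PUT for XMLHTTPRequest.
ROUTES = {}
for method in ("GET", "PUT"):
    ROUTES[(method, "/render/welcome-message")] = render

def dispatch(method, path, body, variables):
    """Look up the handler; unregistered combinations get 405 in this sketch."""
    handler = ROUTES.get((method, path))
    if handler is None:
        return 405, ""
    return 200, handler(body, variables)

document = "Dear ${fullname}\n\nThe end."
user = {"fullname": "John D. Anyuser"}
via_get = dispatch("GET", "/render/welcome-message", document, user)
via_put = dispatch("PUT", "/render/welcome-message", document, user)
print(via_get)
print(via_put)
```

Since both entries point at the same function, the two methods cannot drift apart, and the PUT route can be dropped the day browsers stop discarding the GET body.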

Ah... glad I got all this off my chest. I hope you find the workarounds useful, and if you are a browser vendor or otherwise have leverage to influence things, please consider if it would be possible to work towards supporting HTTP in all its beautiful simplicity in the browsers of the future. Thank you.