|

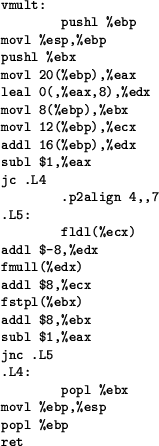

If we look a bit at the extremes, we can take a look at how the vmult() function from the previous example look when it is translated for the CPU to execute. I passed the C code thru an optimizing C compiler, and fetched the assembly output from the compiler.

Translated into real CPU instructions for my PC at home, the code becomes what is seen in Table 3.2.

Note that the tight loop that performs the multiplication takes up eight instructions (it's the code between the two labels .L5 and .L4 above). These eight instructions are executed 1000 times each, when the vmult(A,B,C,1000) call is made.

This is efficient because it runs directly on the CPU. But if we used a language such as Intel 80x86 assembly (like the code above) as the representation language in our virtual machine, we would end up with an extremely slow system. This is because we have an overhead for executing an instruction, on top of what the instruction itself requires (also see section 2.4).

Remember, the virtual machine must first recognize the opcode, then move to the relevant routine in the virtual machine that handles that particular recognized opcode, and then the CPU gets to actually execute the machine-code instructions that performs the action dictated by the recognized virtual machine opcode.

Not only for the performance reasons would it be infeasible to feed the virtual machine code on the form of the assembly language in the previous example. Think about parallelizing such code. The C example given in the previous section may look bad, but the lower the level we see the code at, the worse it gets.

The solution is to define a fairly large set of instructions, that can take care of these trivial tasks, efficiently. For example, the mult instruction used for multiplying vectors, will also perform matrix multiplication. The actual code that does the real work is written in C++ and doesn't look all that much different from the C example presented earlier.

Again the virtual machine is different from a ``real'' (hardware)

machine here. In hardware, it is extremely costly to implement a large

set of instructions. That is why we see the high performance hardware

of today moving away from CISC (complex/complete instruction set

computing) towards RISC (reduced instruction set computing)

architectures instead. In the virtual machine however, a new

instruction has no impact on the efficiency of the virtual

machine (with exception from possible cache pollution due to the

increased code size). Instructions are decoded using a technique

similar to hash-table lookups which are

![]() independently

of the number of instructions. Another megabyte of code in the

virtual machine doesn't really cost us anything with regards to

efficiency, but another million of transistors is a whole other story

for the hardware producers.

independently

of the number of instructions. Another megabyte of code in the

virtual machine doesn't really cost us anything with regards to

efficiency, but another million of transistors is a whole other story

for the hardware producers.

The current set of opcodes implemented in the virtual machine are listed in table 3.2.4.

The arguments passed to the instructions are named dst (short for ``destination'') when the argument is something the instruction will touch (modify). Arguments are named src (short for ``source'') when they are only used.

We will need a much larger set of opcodes, but the current set has been sufficient to implement a number of small test programs that were used for developing the automatic parallelization.

Depending on the opcodes, the arguments can be scalars, vectors, matrices or tuples. For example, the mult opcode implements both scalar integer and floating-point multiplication, element-wise vector multiplication, element-wise tuple multiplication, and matrix multiplication.